This post is for readers who have trouble accessing GitHub. The same content, together with its execution results, is also available as a Jupyter notebook on GitHub.

7-1. Test Data for CNN MNIST

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras.utils import to_categorical

import numpy as np

import matplotlib.pyplot as plt

import os

tf.random.set_seed(777)

print(tf.__version__)

print(keras.__version__)

mnist = keras.datasets.mnist

class_names = ['0', '1', '2', '3', '4', '5', '6', '7', '8', '9']

(train_images, train_labels), (test_images, test_labels) = mnist.load_data()

train_images = train_images.astype(np.float32) / 255.

test_images = test_images.astype(np.float32) / 255.

train_images = np.expand_dims(train_images, axis=-1)

test_images = np.expand_dims(test_images, axis=-1)

train_labels = to_categorical(train_labels, 10)

test_labels = to_categorical(test_labels, 10)
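
To make the preprocessing above concrete, here is a minimal plain-Python sketch of the same three steps (scaling pixels to [0, 1], adding a trailing channel axis, one-hot encoding the label) on a tiny 2x2 stand-in image. The helper names `scale_pixels`, `add_channel_axis`, and `one_hot` are hypothetical; they only mirror what `astype(np.float32) / 255.`, `np.expand_dims(..., axis=-1)`, and `to_categorical(..., 10)` do in the code above, and the pixel values are made up.

```python
def scale_pixels(image):
    """Map integer pixel values in [0, 255] to floats in [0, 1]."""
    return [[pixel / 255.0 for pixel in row] for row in image]


def add_channel_axis(image):
    """Turn an (H, W) image into (H, W, 1) by wrapping each pixel in a list."""
    return [[[pixel] for pixel in row] for row in image]


def one_hot(label, num_classes=10):
    """Return a vector with 1.0 at index `label` and 0.0 elsewhere."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


# A tiny 2x2 stand-in for a 28x28 MNIST image (illustrative values only).
raw_image = [[0, 255], [51, 102]]

scaled = scale_pixels(raw_image)
with_channel = add_channel_axis(scaled)
label_vector = one_hot(3)

print(with_channel)
print(label_vector)
```

In the real pipeline the same transformations turn the training images into an array of shape (60000, 28, 28, 1) and the labels into an array of shape (60000, 10).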

7-2. Modeling

- Choose one of the three types below.

7-2-1. Sequential Model

def create_model():

    model = keras.Sequential()

    model.add(keras.layers.Conv2D(filters=32, kernel_size=3, activation=tf.nn.relu, padding='SAME', input_shape=(28, 28, 1)))

    model.add(keras.layers.MaxPool2D(padding='SAME'))

    model.add(keras.layers.Conv2D(filters=64, kernel_size=3, activation=tf.nn.relu, padding='SAME'))

    model.add(keras.layers.MaxPool2D(padding='SAME'))

    model.add(keras.layers.Conv2D(filters=128, kernel_size=3, activation=tf.nn.relu, padding='SAME'))

    model.add(keras.layers.MaxPool2D(padding='SAME'))

    model.add(keras.layers.Flatten())

    model.add(keras.layers.Dense(256, activation=tf.nn.relu))

    model.add(keras.layers.Dropout(0.4))

    model.add(keras.layers.Dense(10))

    return model

7-2-2. Functional Model

def create_model():

    inputs = keras.Input(shape=(28, 28, 1))

    conv1 = keras.layers.Conv2D(filters=32, kernel_size=[3, 3], padding='SAME', activation=tf.nn.relu)(inputs)

    pool1 = keras.layers.MaxPool2D(padding='SAME')(conv1)

    conv2 = keras.layers.Conv2D(filters=64, kernel_size=[3, 3], padding='SAME', activation=tf.nn.relu)(pool1)

    pool2 = keras.layers.MaxPool2D(padding='SAME')(conv2)

    conv3 = keras.layers.Conv2D(filters=128, kernel_size=[3, 3], padding='SAME', activation=tf.nn.relu)(pool2)

    pool3 = keras.layers.MaxPool2D(padding='SAME')(conv3)

    pool3_flat = keras.layers.Flatten()(pool3)

    dense4 = keras.layers.Dense(units=256, activation=tf.nn.relu)(pool3_flat)

    drop4 = keras.layers.Dropout(rate=0.4)(dense4)

    logits = keras.layers.Dense(units=10)(drop4)

    return keras.Model(inputs=inputs, outputs=logits)

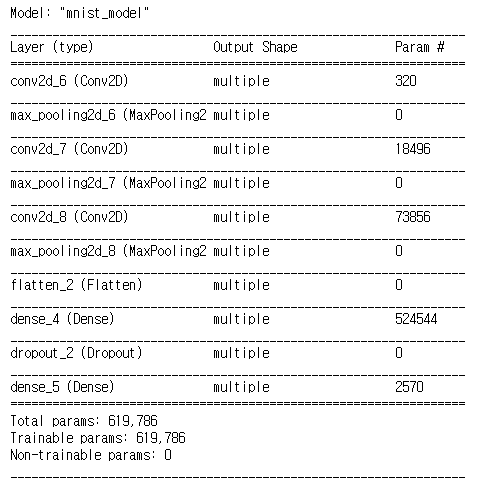

7-2-3. Class Model

class MNISTModel(tf.keras.Model):

    def __init__(self):

        super(MNISTModel, self).__init__()

        self.conv1 = keras.layers.Conv2D(filters=32, kernel_size=[3, 3], padding='SAME', activation=tf.nn.relu)

        self.pool1 = keras.layers.MaxPool2D(padding='SAME')

        self.conv2 = keras.layers.Conv2D(filters=64, kernel_size=[3, 3], padding='SAME', activation=tf.nn.relu)

        self.pool2 = keras.layers.MaxPool2D(padding='SAME')

        self.conv3 = keras.layers.Conv2D(filters=128, kernel_size=[3, 3], padding='SAME', activation=tf.nn.relu)

        self.pool3 = keras.layers.MaxPool2D(padding='SAME')

        self.pool3_flat = keras.layers.Flatten()

        self.dense4 = keras.layers.Dense(units=256, activation=tf.nn.relu)

        self.drop4 = keras.layers.Dropout(rate=0.4)

        self.dense5 = keras.layers.Dense(units=10)

    def call(self, inputs, training=False):

        net = self.conv1(inputs)

        net = self.pool1(net)

        net = self.conv2(net)

        net = self.pool2(net)

        net = self.conv3(net)

        net = self.pool3(net)

        net = self.pool3_flat(net)

        net = self.dense4(net)

        # Pass the training flag explicitly so dropout is active only during training.

        net = self.drop4(net, training=training)

        net = self.dense5(net)

        return net
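
All three definitions above describe the same stack of layers, so a quick sanity check is to work out the tensor shapes and the parameter count by hand. The sketch below does that arithmetic in plain Python. It assumes the Keras defaults that the code above relies on implicitly: stride-1 convolutions, a 2x2 `MaxPool2D` window with stride 2, and `'SAME'` padding, where the output size is the input size divided by the stride, rounded up. The helper names are hypothetical.

```python
import math


def same_pool_output(size, stride=2):
    """Spatial size after a stride-2 pooling layer with 'SAME' padding."""
    return math.ceil(size / stride)


def conv_params(kernel, in_channels, filters):
    """Weights plus one bias per filter for a square-kernel Conv2D."""
    return kernel * kernel * in_channels * filters + filters


def dense_params(in_units, out_units):
    """Weights plus one bias per output unit for a Dense layer."""
    return in_units * out_units + out_units


size, channels = 28, 1
total_params = 0
for filters in (32, 64, 128):
    # A 3x3 convolution with stride 1 and 'SAME' padding keeps the spatial size.
    total_params += conv_params(3, channels, filters)
    channels = filters
    # MaxPool2D defaults to a 2x2 window with stride 2.
    size = same_pool_output(size)
    print(f"after conv/pool with {filters} filters: {size}x{size}x{channels}")

flat_units = size * size * channels
total_params += dense_params(flat_units, 256)
total_params += dense_params(256, 10)

print("flattened units:", flat_units)
print("total parameters:", total_params)
```

Under these assumptions the feature map shrinks from 28 to 14 to 7 to 4, the flattened vector has 2,048 units, and the total comes to 619,786 parameters, which should agree with what `model.summary()` reports.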

7-3. Performance function

def loss_fn(model, images, labels):

    logits = model(images, training=True)

    loss = tf.reduce_mean(tf.keras.losses.categorical_crossentropy(

        y_pred=logits, y_true=labels, from_logits=True))

    return loss

def grad(model, images, labels):

    with tf.GradientTape() as tape:

        loss = loss_fn(model, images, labels)

    # Differentiate only with respect to the trainable variables.

    return tape.gradient(loss, model.trainable_variables)

def evaluate(model, images, labels):

    logits = model(images, training=False)

    correct_prediction = tf.equal(tf.argmax(logits, 1), tf.argmax(labels, 1))

    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

    return accuracy
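
Because the last `Dense` layer has no activation, the model outputs raw logits and the loss is computed with `from_logits=True`. As a rough illustration of what that means, the plain-Python sketch below applies a softmax to the logits, takes the negative log-probability of the true class, and averages over the batch; it also mirrors the argmax comparison used in `evaluate`. The helper names and the three-class numbers are made up for the example, and this is not how TensorFlow implements the loss internally.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities, shifting by the max for stability."""
    shift = max(logits)
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_from_logits(logits, one_hot_label):
    """Categorical cross-entropy for one example: -sum(y * log(softmax(z)))."""
    probs = softmax(logits)
    return -sum(y * math.log(p) for y, p in zip(one_hot_label, probs) if y > 0)


def argmax(values):
    """Index of the largest value, as used for the accuracy comparison."""
    return max(range(len(values)), key=lambda i: values[i])


# Two illustrative examples with 3 classes (made-up numbers).
batch_logits = [[2.0, 1.0, 0.1], [0.5, 0.5, 3.0]]
batch_labels = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]

losses = [cross_entropy_from_logits(z, y) for z, y in zip(batch_logits, batch_labels)]
mean_loss = sum(losses) / len(losses)

correct = [argmax(z) == argmax(y) for z, y in zip(batch_logits, batch_labels)]
accuracy = sum(correct) / len(correct)

print(mean_loss, accuracy)
```

The first example is classified correctly and the second is not, so the accuracy of this toy batch is 0.5, while the second example contributes the larger share of the mean loss.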

7-4. Hyperparameters

learning_rate = 0.001

training_epochs = 2

batch_size = 100
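
To get a feel for what these values imply, the small sketch below counts the optimizer updates they lead to. It assumes that training iterates over the full 60,000-image MNIST training split once per epoch and performs one update per batch; the training loop itself is not part of this section, and `num_train_examples` is a name introduced only for this example.

```python
import math

num_train_examples = 60000  # size of the standard MNIST training split
training_epochs = 2
batch_size = 100

# One optimizer update per batch; round up in case the last batch is partial.
steps_per_epoch = math.ceil(num_train_examples / batch_size)
total_steps = steps_per_epoch * training_epochs

print(steps_per_epoch, total_steps)
```

With these settings that is 600 updates per epoch and 1,200 in total.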

7-5. Define model & optimizer & writer

""" Model """

# When using the Sequential or Functional model

# model = create_model()

# When using the class (subclassed) model

model = MNISTModel()

temp_inputs = keras.Input(shape=(28, 28, 1))

model(temp_inputs)

model.summary()

""" Training """

optimizer = tf.keras.optimizers.Adam(learning_rate=learning_rate)

""" Writer """

cur_dir = os.getcwd()

ckpt_dir_name = 'checkpoints'

model_dir_name = 'mnist_cnn_seq'

checkpoint_dir = os.path.join(cur_dir, ckpt_dir_name, model_dir_name)

os.makedirs(checkpoint_dir, exist_ok=True)

checkpoint_prefix = os.path.join(checkpoint_dir, model_dir_name)
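
The writer setup above builds two related paths: a directory that is created up front, and a file prefix inside it that reuses the directory's own name. The sketch below repeats that pattern in a throwaway temporary directory so the result can be inspected without touching the working directory. The function `build_checkpoint_paths` and the name `demo_model` are hypothetical and exist only for this illustration.

```python
import os
import tempfile


def build_checkpoint_paths(base_dir, ckpt_dir_name, model_dir_name):
    """Create <base>/<ckpt_dir>/<model_dir> and return (directory, file prefix)."""
    checkpoint_dir = os.path.join(base_dir, ckpt_dir_name, model_dir_name)
    os.makedirs(checkpoint_dir, exist_ok=True)
    # The prefix reuses the model directory name as the file name stem.
    checkpoint_prefix = os.path.join(checkpoint_dir, model_dir_name)
    return checkpoint_dir, checkpoint_prefix


# Use a throwaway directory so the sketch does not touch the real working directory.
with tempfile.TemporaryDirectory() as base_dir:
    ckpt_dir, ckpt_prefix = build_checkpoint_paths(base_dir, "checkpoints", "demo_model")
    dir_exists = os.path.isdir(ckpt_dir)
    relative_prefix = os.path.relpath(ckpt_prefix, base_dir)

print(dir_exists, relative_prefix)
```

If such a prefix is later handed to a saver like `tf.train.Checkpoint.save`, the saved files would typically land inside the checkpoint directory with names that start with the prefix's last component.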